This Data Privacy does not exist

Reality exists in the human mind, and nowhere else - George Orwell

The reality is that, in many cases, we are told our data is anonymized, but recent research has shown how a person in an "anonymized" dataset can be re-identified (and "anonymized" itself doesn't seem to be the right word here, anyway).

If you are from the US or the UK, you can try out the outcome of this research yourself and see how well the researchers' model can identify you.

Using our model, we find that 99.98% of Americans would be correctly re-identified in any dataset using 15 demographic attributes.

According to our model, based on these 3 attributes, you would have 75% chance of being correctly identified in any anonymized dataset

— Researchers (Luc Rocher, Julien M. Hendrickx & Yves-Alexandre de Montjoye)
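
To get a feel for why a handful of demographic attributes can single people out, here is a minimal sketch. It is not the researchers' model (which estimates this statistically for whole populations); it only counts, in a made-up toy dataset, how many records become unique as more attributes are combined. The `uniqueness` function, the `people` records, and the attribute names are all hypothetical and chosen for illustration.

```python
from collections import Counter


def uniqueness(records, attributes):
    """Return the fraction of records whose combination of the given
    attributes appears exactly once in the dataset."""
    if not records:
        return 0.0
    # Build one key per record from the selected attributes.
    keys = [tuple(record[a] for a in attributes) for record in records]
    counts = Counter(keys)
    unique = sum(1 for key in keys if counts[key] == 1)
    return unique / len(records)


# Toy, made-up data: no names, only "harmless" demographic attributes.
people = [
    {"zip": "10001", "birth_year": 1985, "gender": "F"},
    {"zip": "10001", "birth_year": 1985, "gender": "M"},
    {"zip": "10001", "birth_year": 1990, "gender": "F"},
    {"zip": "10002", "birth_year": 1985, "gender": "F"},
    {"zip": "10002", "birth_year": 1985, "gender": "F"},
    {"zip": "10002", "birth_year": 1990, "gender": "M"},
]

# The share of unique records grows as attributes are combined.
for attrs in (["zip"], ["zip", "birth_year"], ["zip", "birth_year", "gender"]):
    print(attrs, round(uniqueness(people, attrs), 2))
```

In this toy data nobody is unique by zip code alone, but most records become unique once birth year and gender are added, even though no name is ever stored.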

While some argue that this anonymization should be called pseudonymization, others say this data is just de-identified or depersonalized.

Whatever it should be called, it has become more obvious that in this age of "this X does not exist", real privacy does not exist either, or perhaps the reality of privacy is not so real.

You never know for sure what these large organizations do with our data, or whether all their talk about privacy is real. A recent report revealed that Apple’s Siri records fights, doctor’s appointments, and sex (and contractors hear it). That came a few months after a report that Amazon employs thousands of people to listen to your Alexa conversations. And before you think of hailing Google Home: yep, human workers are listening to recordings from Google Assistant, too.

To make things even more paranoia-inducing, The Washington Post reported that as many as 4 million people have Web browser extensions that sell their every click. And that’s just the tip of the iceberg.

Meanwhile, for us data scientists, this is also a very good time to remind ourselves of Apple’s Differential Privacy and Google’s Federated Learning. More importantly, Ethics + Data Science.
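
For readers who have not met differential privacy before, the core idea can be sketched in a few lines: add calibrated random noise to an aggregate so that no single person's presence changes the answer much. The snippet below is a textbook Laplace mechanism for a count query, not Apple's actual implementation; `private_count`, the `ages` list, and the chosen `epsilon` are hypothetical and only for illustration.

```python
import random


def private_count(values, predicate, epsilon, rng=random):
    """Return a noisy count of the values matching predicate.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon is used.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon
    # The difference of two exponential draws with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# Made-up data: how many people are 40 or older?
ages = [23, 35, 41, 52, 29, 64, 38]
print(private_count(ages, lambda age: age >= 40, epsilon=1.0))
```

A smaller `epsilon` means more noise and stronger privacy; a larger one gives more accurate answers with weaker privacy.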

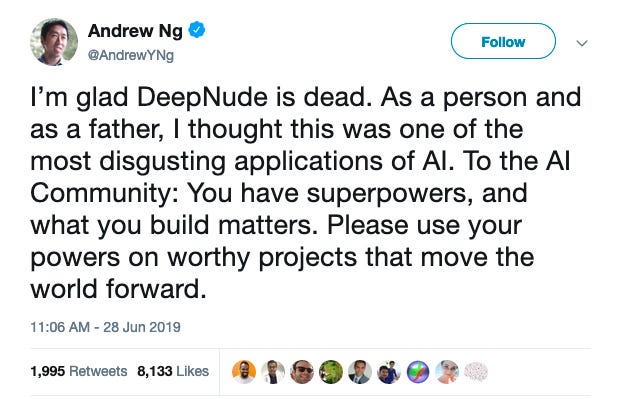

Talking about ethics in ML is one thing, but we live in the same world where deep learning is being used to undress women, which is nothing less than a big shame. Yet some defend it, crying out loud that taking down its GitHub repo was an insult to freedom of speech. Thanks to Andrew Ng, a powerful voice, for reiterating the community’s ethics.

My apologies if you opened your mail expecting a bunch of Machine Learning algorithmic discussions and this edition bored you with Data + Privacy + Ethics instead. In my opinion, this topic matters more than the technological advancements themselves, and it is essential if DS / ML / AI is to do good for society rather than harm. That is also why OpenAI was founded, and Microsoft recently announced a $1 billion investment in it.

If you think this is a worthy discussion, please forward this email to your data friends, share it with your network, and help it reach more curious minds.

If you have suggestions for me, reply to this mail or message me on LinkedIn. Ask your friends to sign up / read at https://nulldata.substack.com/.

Thank you,

Abdul Majed Raja